🌟 Introduction: The AI Revolution That’s Changing Video Forever

In 2025, one name has taken the entire internet by storm — OpenAI Sora.

If you’ve scrolled through Twitter (X), YouTube Shorts, or Reddit, chances are you’ve already seen hyper-realistic videos that look like Hollywood-level CGI… only to find out they were created by AI.

That’s the magic of Sora. 💡

OpenAI Sora isn’t just another text-to-video generator. It’s a full-blown AI filmmaking engine that turns imagination into cinema-quality clips in minutes. Whether you’re making advertisements, music videos, VFX scenes, or animated stories, Sora has become a creative playground for everyone, from YouTubers and filmmakers to marketers and content creators.

But what makes it so special?

Let’s dive into everything you need to know: how Sora started, how it works, and how you can master it like a pro.

🧠 What Is OpenAI Sora?

Sora is OpenAI’s AI video generation model. OpenAI first previewed it in February 2024 and released it to the public in December 2024. It generates realistic videos from text prompts, similar to how ChatGPT writes text or DALL·E creates images.

You simply describe a scene — for example:

“A cinematic drone shot of a mountain with waterfalls, sunrise in the background, birds flying across the sky.”

And within a few minutes, Sora turns that sentence into a high-quality video (up to 1080p) with motion, lighting, and depth, something that previously required an entire production crew.

Sora is a diffusion model built on a transformer architecture. Instead of generating single images, it works on compressed video that spans both space and time, so it learns not just what should appear, but how it should move.

It’s trained on a massive dataset of video frames and textual descriptions, allowing it to understand both spatial and temporal information.
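OpenAI’s technical report on Sora describes compressing video into a latent representation and then cutting it into “spacetime patches”: small blocks that span a few frames and a small area of the image, which the transformer treats as tokens. The sketch below illustrates only the patch-cutting step, on a tiny grayscale video stored as nested Python lists. The function name and patch sizes are illustrative assumptions, and a real system patchifies learned latents rather than raw pixels.

```python
from typing import List

Video = List[List[List[float]]]  # indexed as video[t][y][x]


def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Cut a (T, H, W) video into non-overlapping pt x ph x pw blocks.

    Each block is flattened into one list, playing the role of a token.
    Assumes T, H, and W are exact multiples of the patch sizes.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by patch sizes")
    patches = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                # Gather every value inside this time-height-width block.
                patch = [
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ]
                patches.append(patch)
    return patches


# A 4-frame, 4x4 "video" whose pixel values equal their frame index.
video = [[[float(t)] * 4 for _ in range(4)] for t in range(4)]
tokens = spacetime_patches(video, pt=2, ph=2, pw=2)
print(len(tokens), len(tokens[0]))  # 8 tokens, each holding 2*2*2 = 8 values
```

Because every token spans two frames, a single token already carries information about change over time, which is the intuition behind treating video as spacetime patches.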

💥 Why Sora Is a Game-Changer in 2025

There are many AI video tools in 2025 (Runway, Pika, Synthesia, and others), but many creators consider Sora the most realistic of them.

Here’s why creators call it the “ChatGPT moment for video.”

1. Unmatched Realism

Sora generates videos that look truly cinematic, with convincing lighting, depth perception, and character movement (though OpenAI notes it can still struggle with the physics of complex scenes).

2. Natural Camera Motion

Unlike older AI tools, Sora creates scenes with realistic pans, zooms, and handheld effects — giving your clips that authentic camera look.

3. Scene Continuity

Sora remembers what happens across frames, maintaining object positions, character consistency, and motion flow.

4. Custom Style Adaptation

You can tell Sora to mimic the look of anime, Pixar, documentary footage, or film noir, and it adapts convincingly.

5. Zero Technical Barriers

No editing skills? No problem.

Sora lets you create videos just by describing them in plain English.

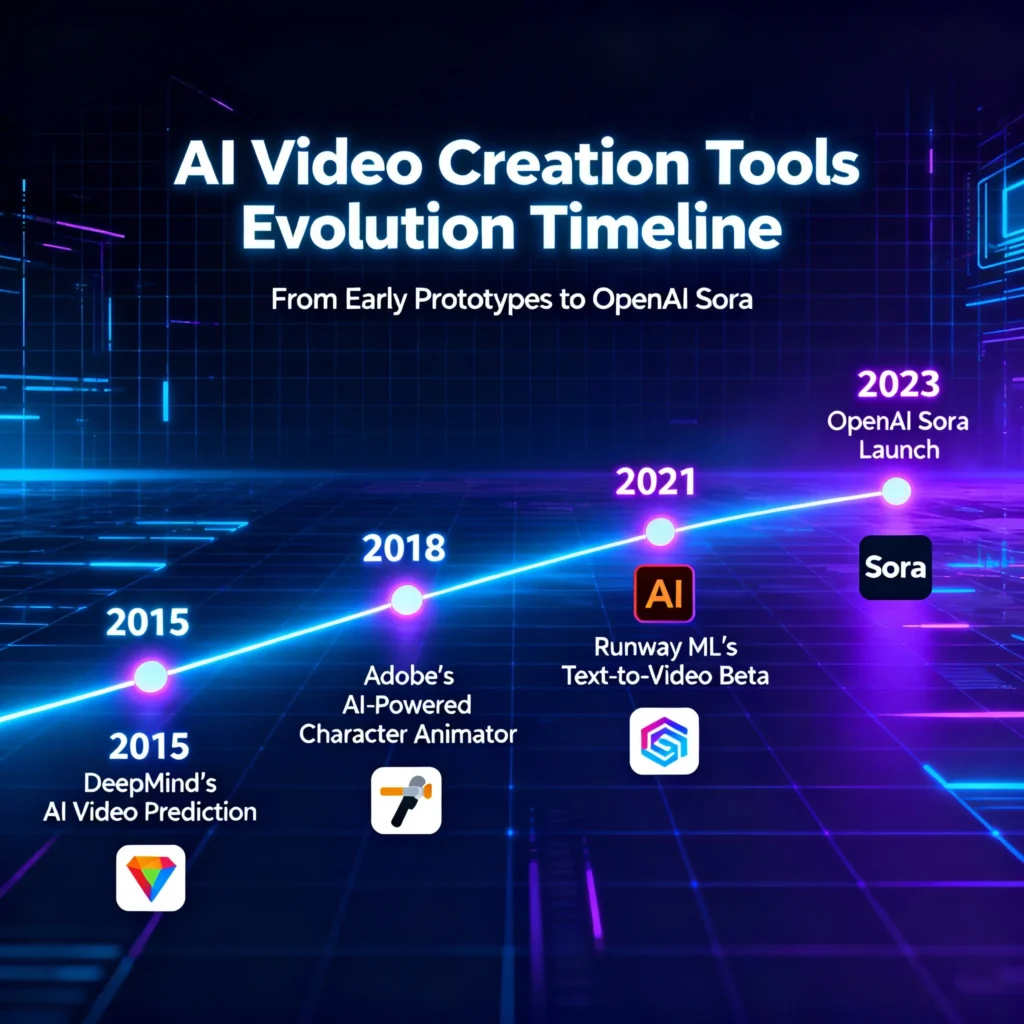

🎞️ The History: How We Got to Sora

Before Sora, the AI video generation journey had several important milestones:

| Year | Technology | Description |
|---|---|---|
| 2021 | DALL·E | First OpenAI model to generate images from text. |
| 2022 | Stable Diffusion / Midjourney | Brought artistic-level image generation to the public. |
| 2023 | Runway Gen-2 | Early text-to-video generation with limited realism. |
| 2024 | OpenAI Sora | Previewed in February and released publicly in December, with far more realistic motion and scenes. |
| 2025 | Sora 2 | Released in September with more realistic physics, synchronized audio, and better multi-shot storytelling. |

Sora represents a shift from “AI as a helper” to “AI as a creator.”

It’s not just a tool — it’s a new era of visual storytelling.

🎬 The Rise of AI Video Generation

Before we get into Sora’s tech, let’s understand the larger revolution it belongs to.

🔹 The Global Demand for Video

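Video now makes up the large majority of internet traffic; Cisco’s Visual Networking Index forecast that video would account for 82% of all IP traffic by 2022.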

Brands, educators, and influencers all rely on video to reach their audiences.

But professional video creation is expensive — you need:

- Cameras 🎥

- Actors 👩‍🎤

- Lighting setups 💡

- Editors and VFX teams 💻

That’s where AI stepped in — turning imagination into pixels.

🔹 The Evolution of AI Tools

AI started with generating static content:

- Text (GPT models)

- Images (DALL·E, Midjourney)

- Voice (ElevenLabs, Play.ht)

- 3D & animation (Luma AI)

Now, video is the next logical step — combining all forms of AI creativity into one.

🔹 Why OpenAI Dominates

OpenAI was already leading with ChatGPT, Whisper, and DALL·E.

Sora connects the dots: it borrows the recaptioning technique from DALL·E 3, uses GPT to expand short prompts into detailed descriptions, and applies diffusion to generate intelligent motion.

🧩 How Sora Differs from Other AI Video Tools

Let’s break down where Sora stands compared to competitors:

| Feature | Sora (OpenAI) | Runway Gen-2 | Pika Labs | Synthesia |
|---|---|---|---|---|
| Text-to-Video Quality | Ultra-Realistic | Semi-Realistic | Stylized | Avatar-Based |
| Camera Movement | Yes | Partial | Basic | No |
| Sound Integration | Yes in Sora 2 (original Sora is silent) | No | No | Yes |
| Length Limit | Up to 60 s in the 2024 preview; up to 20 s per clip at public launch | 10–15 secs | 8–12 secs | 2–5 mins |
| Realism (author’s rating) | 9.5/10 | 7/10 | 6.5/10 | 5/10 |
| Best Use Case | Creative filmmaking | Short clips | Animated stories | Talking-head videos |

Sora’s realism and longer clips give it an edge for storytelling: it produces coherent, scene-length shots rather than only short, looping animations.

⚙️ Inside the Tech: How Sora Works

Sora is a diffusion model that uses a transformer, the same architecture family behind ChatGPT’s text understanding, but applied to patches of video instead of words.

Here’s a simplified breakdown. OpenAI has published only a high-level technical report, so treat these stages as a conceptual approximation rather than the exact internal pipeline:

- Prompt Understanding: A GPT-like model parses your text, identifying scene objects, actions, lighting, mood, and camera direction.

- Frame Prediction: The diffusion model starts from noise and, step by step, improves detail and consistency.

- Temporal Consistency: Because the model generates many frames together, objects and lighting stay consistent across the clip.

- Scene Rendering: The frames form smooth motion, with simulated depth of field and lighting.

- Final Output: The video is delivered at your chosen aspect ratio, ready for stabilization or color grading.

🔍 Prompting: The Secret Skill of Sora Pros

Just like writing great prompts for ChatGPT, prompting is the key to unlocking Sora’s best results.

Here are some pro prompt structures that creators use:

🎬 “A cinematic aerial shot of Tokyo at night, neon lights reflecting on wet streets, cars moving in slow motion, depth-of-field focus, 4K realism.”

🌲 “A cozy cabin in snowy mountains during sunset, smoke rising from chimney, slow zoom-out, warm lighting.”

🔑 Pro Prompt Tips:

- Use camera terms (pan, zoom, aerial, close-up).

- Add mood & lighting (golden hour, neon, moody).

- Mention frame rate & style (24fps cinematic, anime, Pixar).

- Keep prompts descriptive but concise (15–25 words is a good starting point).
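To make these tips repeatable, you can assemble prompts from fixed slots. The hypothetical helper below (not part of any Sora interface) joins a camera shot, subject, action, lighting, and style in one consistent order and counts the words so you can compare the result against the 15–25 word guideline. Treat it as a writing aid, not an official prompt format.

```python
def build_prompt(shot: str, subject: str, action: str, lighting: str, style: str) -> str:
    """Join prompt elements in a fixed order: camera + subject, action, lighting, style."""
    parts = [f"{shot} of {subject}", action, lighting, style]
    # Skip any slot left empty so the prompt has no dangling commas.
    return ", ".join(p.strip() for p in parts if p.strip())


def word_count(prompt: str) -> int:
    """Count whitespace-separated words."""
    return len(prompt.split())


prompt = build_prompt(
    shot="A slow aerial shot",
    subject="a snowy mountain cabin at sunset",
    action="smoke rising from the chimney",
    lighting="warm golden-hour light",
    style="24fps cinematic film look",
)
print(prompt)
print(word_count(prompt), "words")  # 23 words
```

Keeping the slots in the same order makes it easier to compare results when you change just one element, such as the lighting.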

🧩 Why Everyone’s Talking About Sora

Sora isn’t just a video tool — it’s a new medium.

It allows you to think visually, turning creativity into motion faster than ever before.

Content creators on YouTube are already using Sora to:

- Generate short films 🎞️

- Explain concepts visually 🧠

- Create AI documentaries 🌍

- Animate storybooks for children 📖

Marketers use it to:

- Make ad videos in minutes

- Visualize products before launch

- Create personalized campaigns

Teachers and trainers use it for:

- Educational explainer videos

- Historical recreations

- Scientific visualizations

The possibilities are infinite. ✨

🧩 The “Sora Effect” on the Internet

Since its release, Sora has sparked massive social media trends.

On Twitter (X), creators post “Guess if it’s real or AI?” clips, and even experienced viewers often can’t tell the difference.

On YouTube, many new Sora-based channels have appeared, focusing on:

- AI Film Challenges 🎥

- Visual storytelling tutorials 📚

- AI Cinematography experiments 🌅

Even Hollywood has taken notice — early adopters are exploring Sora for previsualization and concept scenes.

How OpenAI Sora Works & Step-by-Step Guide for Video Creators

⚡ Key Features of OpenAI Sora in 2025

The 2025 version of OpenAI Sora isn’t just an upgrade — it’s a revolution.

While the first beta shocked creators, the new update makes Sora a full-fledged AI filmmaking suite.

Let’s explore its top features 👇

🎬 1. Text-to-Video Generation (Ultra-Realistic)

At its core, Sora turns text descriptions into realistic videos, usually within minutes.

You simply type what you imagine — and it transforms words into motion.

Example:

“A cinematic drone shot of a futuristic city skyline at golden hour, flying cars zooming past.”

Within a few minutes, you’ll get a breathtaking cinematic clip.

Sora understands camera angles, light intensity, and emotional tone.

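🧩 2. Long Video Generation (Up to a Minute)

Older AI tools could only create clips a few seconds long. In OpenAI’s February 2024 preview, Sora generated videos up to a minute long, a huge leap for storytelling; the public version caps individual clips at shorter lengths (up to 20 seconds at launch), depending on your plan.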

This enables:

- Short films

- Explainer videos

- Ads and marketing content

- Educational visuals

Each frame is crafted with temporal consistency — meaning objects and motion remain natural across the timeline.

🪄 3. Multi-Character & Object Interaction

The latest model supports multiple subjects interacting in one scene.

Example:

“Two people sitting at a café table, sipping coffee, laughing, and looking out the window as it rains.”

Sora handles body language, eye contact, and gestures, something many other AI video tools still struggle with.

🎨 4. Style Customization & Cinematic Control

You can now choose your preferred cinematic style:

- 🎥 Realistic film

- 🧚 Fantasy animation

- 🎨 Anime

- 🕶️ Noir / Vintage

- 🎞️ Documentary tone

With prompt-based customization, you control:

- Color grading

- Lighting mood

- Lens type (e.g., 50mm cinematic, fisheye, drone cam)

- Frame rate & resolution

🔊 5. Audio Intelligence (Sora 2)

The original Sora generated silent video. Sora 2, released in September 2025, generates synchronized dialogue, sound effects, and background audio along with the visuals.

This brings it much closer to a one-stop AI video production system.

🧠 6. Contextual Memory

Sora doesn’t just render random frames — it remembers context.

If a scene includes a character picking up a cup in one frame, that cup stays consistent throughout the clip.

This “contextual persistence” makes its videos often hard to distinguish from real footage, although glitches still occur.

🌍 7. Multi-Scene Story Generation

Now you can generate multiple connected scenes from one prompt:

“Show a man waking up, walking to his car, and driving into the city.”

Sora creates scene transitions automatically, just like a movie editor — including pans, zoom-ins, and fade effects.

🧩 8. Integration with ChatGPT & DALL·E

Sora sits inside OpenAI’s ecosystem: you sign in with your ChatGPT account, and it accepts images as a starting point for video.

That means you can:

- Use ChatGPT to script your scene

- Generate visuals in DALL·E

- And render the entire sequence with Sora

This makes the workflow fully AI-powered from concept to creation.

🔍 How OpenAI Sora Works (Simplified Tech Breakdown)

Understanding the tech helps you prompt better and get higher-quality results.

Sora uses a hybrid of:

- Diffusion models (which generate content by gradually removing noise, working on a compressed representation of the video)

- Transformer models (which model how patches of the video relate across space and time)

Let’s break it down simply 👇

🧩 Step 1: Text Understanding (Prompt Analysis)

According to OpenAI’s technical report, a GPT model can first expand your short prompt into a more detailed description that captures:

- Scene type

- Lighting

- Objects

- Emotions

- Camera movement

For example, “sunset drone shot of mountains” = environment + lighting + motion.

🧩 Step 2: Scene Layout Planning

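Conceptually, the model has to work out what should appear where, including object depth, distance, and scale.

OpenAI hasn’t described a separate planning stage, so think of this as something the model learns implicitly rather than an explicit 3D blueprint.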

🧩 Step 3: Frame Generation via Diffusion

Like Stable Diffusion, it starts with random noise and “denoises” it step by step until realistic frames form. Unlike an image model, it denoises all the frames of a clip together.
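To make “denoising” concrete, here is a toy sampler in the style of DDIM (a common deterministic diffusion sampler) working on five numbers instead of a video. The big assumption: a trained neural network would normally estimate the clean signal from the noisy input, but here an “oracle” simply returns the known answer, so the example shows only how the update rule walks from pure noise to a clean result. The noise schedule is an arbitrary choice, and this is not Sora’s actual sampler.

```python
import math
import random
from typing import List


def ddim_sample(target: List[float], steps: int = 50, seed: int = 0) -> List[List[float]]:
    """Toy deterministic DDIM-style sampler with an *oracle* denoiser.

    Returns the trajectory: the sample at every step, from noise to clean.
    """
    rng = random.Random(seed)
    # alpha_bar rises from 0 (pure noise) to 1 (clean signal); schedule is arbitrary.
    alpha_bars = [(i / steps) ** 2 for i in range(steps + 1)]
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from Gaussian noise
    trajectory = [x]
    for i in range(steps):
        a_now, a_next = alpha_bars[i], alpha_bars[i + 1]
        x0_pred = target  # oracle stand-in for the trained denoising network
        # Noise estimate implied by the current sample and the predicted clean signal.
        eps = [(xi - math.sqrt(a_now) * x0) / math.sqrt(1.0 - a_now)
               for xi, x0 in zip(x, x0_pred)]
        # Re-mix predicted signal and noise at the next, less noisy level.
        x = [math.sqrt(a_next) * x0 + math.sqrt(1.0 - a_next) * e
             for x0, e in zip(x0_pred, eps)]
        trajectory.append(x)
    return trajectory


clean = [0.0, 0.5, 1.0, 0.5, 0.0]  # e.g. brightness along one row of pixels
path = ddim_sample(clean)
for step in (0, 25, 50):
    err = max(abs(a - b) for a, b in zip(path[step], clean))
    print(f"step {step:2d}: max error {err:.3f}")
```

In a real model, the quality of the result depends entirely on how well the network’s estimate replaces the oracle line.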

🧩 Step 4: Temporal Frame Alignment

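Here’s the secret sauce 🧠: because Sora processes many frames at once, it keeps subjects consistent between frames, preventing flicker and motion jitter. OpenAI notes this helps a subject stay the same even when it briefly goes out of view.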
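Flicker can be measured roughly by checking how much each frame differs from the one before it. The sketch below uses a made-up `flicker_score` (mean absolute pixel change between consecutive frames) to compare two clips. In the first, every frame shares one underlying scene. In the second, every frame gets fresh, independent noise, which is roughly what happens when frames are generated separately. It is a diagnostic illustration only, not how Sora enforces consistency.

```python
import random
from typing import List

Frame = List[float]


def flicker_score(frames: List[Frame]) -> float:
    """Average absolute pixel change between consecutive frames (lower = steadier)."""
    diffs = []
    for prev, cur in zip(frames, frames[1:]):
        diffs.append(sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur))
    return sum(diffs) / len(diffs)


rng = random.Random(42)
base = [rng.random() for _ in range(64)]  # one "scene" of 64 pixels

# Frames that share the scene and brighten slightly over time.
steady = [[p + 0.01 * t for p in base] for t in range(8)]
# Frames where every pixel gets independent noise in every frame.
jittery = [[p + rng.uniform(-0.2, 0.2) for p in base] for _ in range(8)]

print(f"steady:  {flicker_score(steady):.3f}")
print(f"jittery: {flicker_score(jittery):.3f}")
```

The steady clip changes by a constant 0.01 per frame, while the jittery one changes by roughly ten times as much, even though both show the same scene.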

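🧩 Step 5: Decoding & Output

Finally, a decoder converts the generated representation back into full-resolution video frames. Color grading, stabilization, and other finishing touches can then be applied in an editor.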

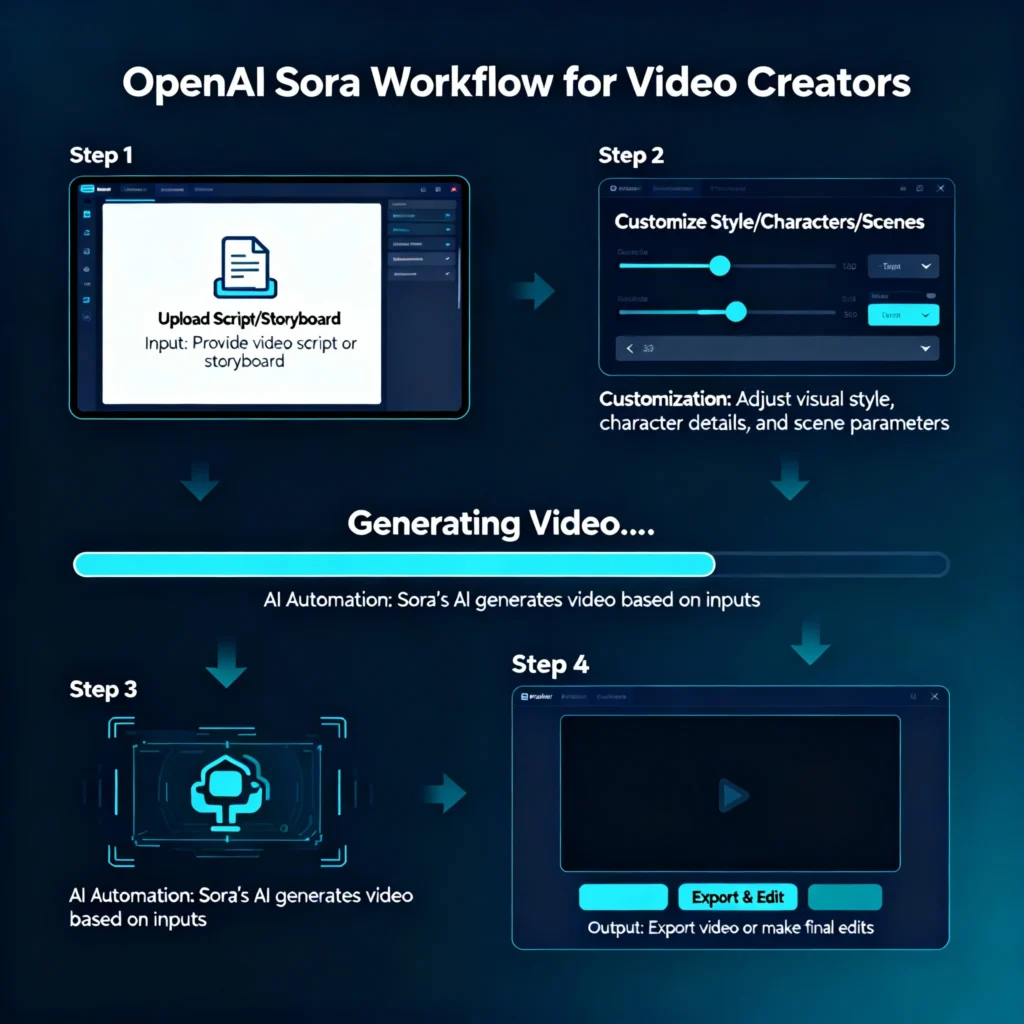

🛠️ Step-by-Step Guide: How to Use OpenAI Sora

Now that you understand how it works, let’s walk through how to use it like a pro 👇

🪄 Step 1: Access the Sora Platform

Sora launched publicly in December 2024 for ChatGPT Plus and Pro subscribers, and Sora 2 arrived in September 2025 with an iOS app and invite-based access at first.

Availability and usage limits depend on your country and plan, so check OpenAI’s website for current access options.

You work in a web-based dashboard, similar to ChatGPT or DALL·E.

🎬 Step 2: Write Your Prompt

This is the most important step.

A good prompt = a professional-looking video.

Example Prompt:

“A cinematic close-up shot of a woman standing on a cliff during sunset, wind blowing her hair, dramatic lighting, 4K resolution.”

Pro Prompt Tips:

- Add camera type (drone, handheld, wide-angle)

- Add lighting & mood (sunset, neon, foggy)

- Mention video style (cinematic, documentary, animation)

- Pick a length within your plan’s limits (for example, 5s, 10s, or 20s)

🧠 Step 3: Choose Style & Settings

In Sora’s interface, you can select:

- Aspect ratio: 16:9, 9:16, 1:1

- Style: Realistic, animated, artistic

- Resolution: 480p, 720p, or 1080p, depending on your plan

- Duration: up to 20 seconds, depending on your plan
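These settings translate directly into pixel dimensions and frame counts. The small calculator below assumes the resolution label names the shorter side of the frame (so 1080p at 16:9 is 1920×1080 and at 9:16 is 1080×1920) and takes the frame rate as a parameter you supply. The exact dimensions and frame rate Sora outputs may differ.

```python
from fractions import Fraction
from typing import Tuple


def frame_size(short_side: int, aspect: str) -> Tuple[int, int]:
    """Return (width, height) for a ratio like '16:9', treating short_side as the smaller edge."""
    w_ratio, h_ratio = (int(n) for n in aspect.split(":"))
    ratio = Fraction(w_ratio, h_ratio)
    if ratio >= 1:  # landscape or square: height is the short side
        return round(short_side * ratio), short_side
    return short_side, round(short_side / ratio)  # portrait: width is the short side


def frame_count(seconds: float, fps: int) -> int:
    """Number of frames in a clip of the given length."""
    return round(seconds * fps)


for aspect in ("16:9", "9:16", "1:1"):
    w, h = frame_size(1080, aspect)
    print(f"{aspect:>5}: {w}x{h}")
print("10 s at 24 fps =", frame_count(10, 24), "frames")
```

Using `Fraction` keeps the ratio exact, so common sizes such as 1280×720 come out without rounding drift.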

🖥️ Step 4: Generate & Preview

Click generate, and Sora starts rendering your video, which can take a few minutes.

You’ll see progress bars and preview snapshots during the generation process.

Once done, you can download it in MP4 or MOV formats.

🎨 Step 5: Fine-Tune Results

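Sora includes built-in editing tools:

- Remix: change elements of a clip with a new prompt

- Re-cut: trim a clip and extend it

- Loop and Blend: create seamless loops or merge two clips

- Titles, overlay text, and detailed color correction: usually added afterward in a video editor

These make your final video look truly professional.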

🚀 Step 6: Combine with Audio or Music

The original Sora generates silent video, and even Sora 2’s built-in audio may not fit every project, so you can add AI-generated voices or music using:

- ElevenLabs for voiceovers

- Soundraw.io for background scores

- Adobe Podcast AI for narration clarity
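Once you have a voiceover or music track, the free command-line tool ffmpeg (separate from Sora and the services above) can attach it to your clip without re-encoding the video. This sketch only builds the command as a Python list; the file names are placeholders, and the flags used (`-map`, `-c:v copy`, `-c:a aac`, `-shortest`) are standard ffmpeg options.

```python
from typing import List


def mux_command(video: str, audio: str, output: str) -> List[str]:
    """Build an ffmpeg command that adds an audio track to a silent video.

    -map takes the video stream from input 0 and the audio stream from input 1,
    -c:v copy keeps the video untouched (no re-encode), -c:a aac encodes the
    audio for MP4, and -shortest stops at the end of the shorter input.
    """
    return [
        "ffmpeg", "-y",
        "-i", video,
        "-i", audio,
        "-map", "0:v:0", "-map", "1:a:0",
        "-c:v", "copy", "-c:a", "aac",
        "-shortest",
        output,
    ]


cmd = mux_command("sora_clip.mp4", "voiceover.mp3", "final.mp4")
print(" ".join(cmd))
```

With ffmpeg installed, run it via `subprocess.run(cmd, check=True)`. Copying the video stream keeps the clip’s original image quality; only the audio is encoded.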

🧩 Sora Prompt Examples That Go Viral

Here are some real creator-tested prompts that deliver jaw-dropping results:

| Prompt | Output Type | Use Case |
|---|---|---|
| “A fantasy forest glowing with bioluminescent plants at night, 4K cinematic lighting” | Landscape | Music videos / intros |
| “Robot dancing on a neon-lit street under rain, reflective surfaces, cyberpunk vibes” | Scene | Sci-fi shorts |
| “Ancient warrior walking through desert storm, slow motion, 24fps cinematic” | Character | Storytelling reels |
| “Close-up of coffee being poured in slow motion, steam rising, realistic lighting” | Product | Ad visuals |

🔮 How Professionals Use Sora in 2025

Creators, filmmakers, and brands have started using Sora in clever ways.

🎥 1. YouTubers & Influencers

- Create cinematic intros

- Generate storytelling shorts

- Visualize fantasy or tech ideas

💼 2. Businesses

- Generate product demo videos

- Create concept ads

- Pitch marketing campaigns faster

🧑‍🏫 3. Educators

- Build science explainers

- Animate historical events

- Create visual lessons

🎮 4. Game Developers

- Create realistic concept animations

- Visualize gameplay ideas

- Generate cinematic trailers

💡 Pro Tips to Get the Most Out of Sora

Here’s how you can create truly viral videos using Sora 👇

- Use strong adjectives – cinematic, emotional, detailed, volumetric lighting.

- Add motion – “panning,” “tracking,” or “slow zoom.”

- Keep prompts focused (roughly 15–30 words) – overly long prompts can dilute the main subject.

- Always mention camera type – it adds realism.

- Generate 2–3 variations – each run gives a different result, so pick the best one.

- Add storytelling tone – describe the scene like a movie director.

- Keep subject clear – one focus per scene (person/object).

- Avoid vague prompts – “beautiful scenery” is too broad.
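You can turn this checklist into a quick self-review. The hypothetical `lint_prompt` function below flags prompts that fall outside a word-count range (15–30 by default, an assumption you can adjust), lack a camera or motion term, or contain vague phrases. The word lists are small samples, not an exhaustive vocabulary, and passing the check does not guarantee a good result.

```python
import re
from typing import List

# Small sample vocabularies; extend them to match your own style.
CAMERA_TERMS = {"aerial", "drone", "close-up", "wide-angle", "handheld", "overhead"}
MOTION_TERMS = {"pan", "panning", "zoom", "tracking", "dolly", "orbit", "tilt", "push-in"}
VAGUE_PHRASES = ("beautiful scenery", "nice video", "something cool", "amazing scene")


def lint_prompt(prompt: str, min_words: int = 15, max_words: int = 30) -> List[str]:
    """Return warnings for a prompt; an empty list means no checklist issues found."""
    warnings = []
    words = re.findall(r"[a-z0-9'-]+", prompt.lower())
    if not min_words <= len(words) <= max_words:
        warnings.append(f"word count {len(words)} outside {min_words}-{max_words}")
    if not CAMERA_TERMS & set(words):
        warnings.append("no camera type (e.g. aerial, close-up, handheld)")
    if not MOTION_TERMS & set(words):
        warnings.append("no camera motion (e.g. pan, zoom, tracking)")
    for phrase in VAGUE_PHRASES:
        if phrase in prompt.lower():
            warnings.append(f"vague phrase: '{phrase}'")
    return warnings


good = ("Handheld close-up of an old fisherman mending nets at dawn, slow push-in, "
        "soft fog, volumetric light, muted documentary color grade")
print(lint_prompt("Beautiful scenery"))  # four warnings
print(lint_prompt(good))                 # []
```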

Sora vs Other AI Video Tools + Real Creator Insights (2025)

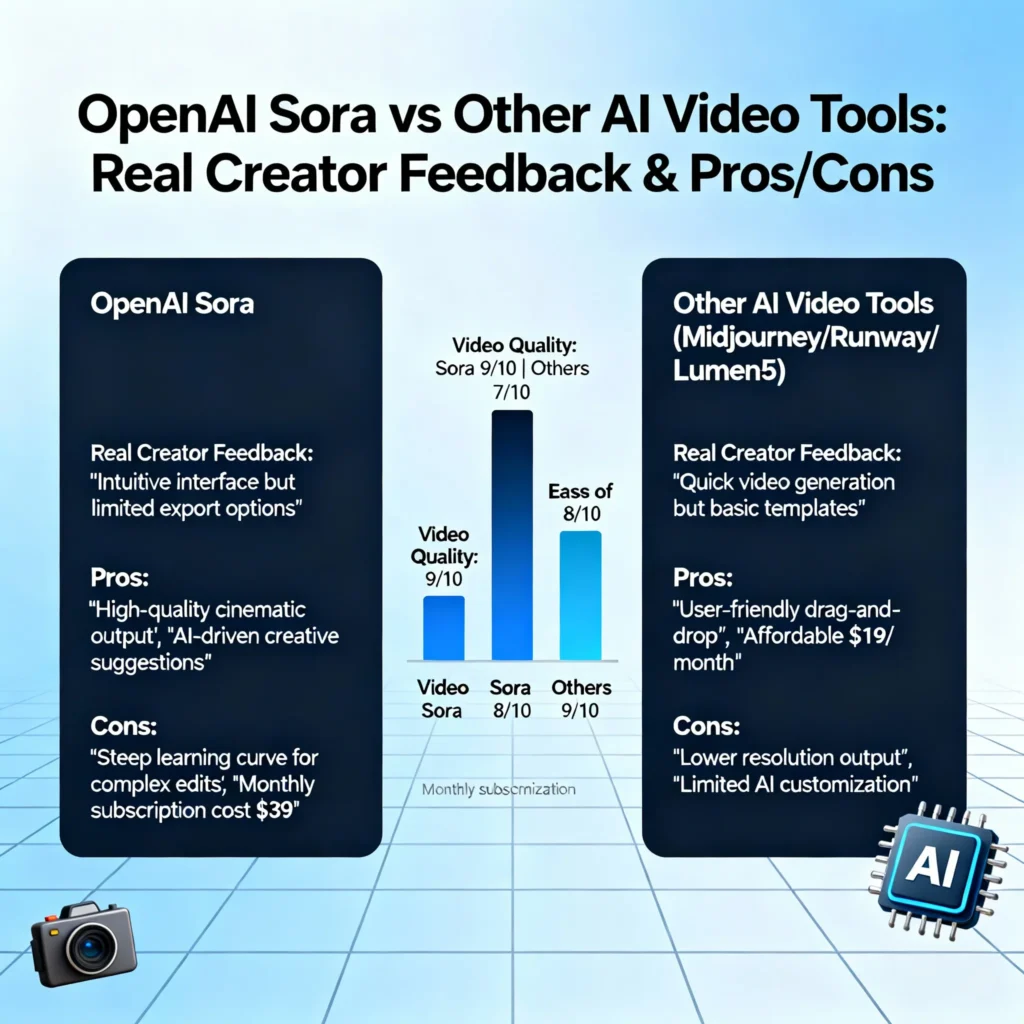

⚔️ OpenAI Sora vs Other AI Video Tools

The AI video landscape in 2025 is filled with competition — tools like Runway Gen-2, Pika Labs, Synthesia, and Pictory all have their own fanbases.

But the question is: How does Sora actually compare?

Let’s break it down 👇

🥇 1. Sora vs Runway Gen-2

| Feature | OpenAI Sora | Runway Gen-2 |
|---|---|---|
| Realism | 9.5/10 (cinematic-quality visuals) | 7/10 (stylized, AI-like) |
| Video Length | Up to 60 s in the 2024 preview; up to 20 s per clip at launch | 15 seconds |
| Motion Stability | Smooth, consistent | Slight flickering |
| Scene Understanding | Deep (can understand complex prompts) | Medium |
| Ease of Use | Beginner-friendly | Requires practice |
| Best For | Cinematic & storytelling videos | Short-form creative clips |

💡 Verdict:

Sora wins in realism, storytelling, and consistency.

Runway still has an edge for speed and accessibility (it has been publicly available longer and in more regions), but its Gen-2 visuals look more clearly AI-generated.

🎬 2. Sora vs Pika Labs

| Feature | OpenAI Sora | Pika Labs |
|---|---|---|
| Output Quality | Ultra realistic | Artistic / stylized |
| Camera Control | Natural panning, zooms | Basic motion |
| AI Characters | Real human-like expressions | Cartoonish faces |
| Length Limit | Up to 60 sec (2024 preview); 20 sec per clip at launch | 10–12 sec |
| Creative Styles | Real + Fantasy + Anime | Mostly stylized or anime |
| Best For | Filmmakers, brands | Animators, meme creators |

💡 Verdict:

Pika Labs is fun and fast for social media content, but Sora’s depth, emotion, and realism are on another level.

🧠 3. Sora vs Pictory AI

| Feature | Sora (OpenAI) | Pictory AI |
|---|---|---|
| Video Creation Type | Text-to-video generation | Text-to-slideshow / narration |
| Customization | Cinematic control | Templates & stock footage |
| Output Look | Real-world video | Presentation-style |
| Voice Sync | Built into Sora 2 | Built-in |
| Use Case | Creative films, ads, animation | Business explainers |

💡 Verdict:

Pictory is great for marketers and educators, but Sora is for storytellers, filmmakers, and visual artists.

🏁 4. Sora vs Synthesia

| Feature | Sora | Synthesia |
|---|---|---|
| Output Type | Full scene video | Talking-head AI avatars |
| Creative Freedom | Unlimited | Restricted to templates |
| Realism | Cinematic | Studio-like |
| Use Case | Creative storytelling | Corporate videos |

💡 Verdict:

Synthesia is corporate, Sora is creative.

For creators and entertainment, Sora wins by a landslide.

🌍 How Creators Are Using Sora in 2025

Let’s see how professionals and influencers are actually using Sora to grow their careers 👇

🎥 1. YouTube & Short-Form Creators

Sora has completely changed how people create video content for YouTube Shorts, Reels, and TikTok.

Creators use it to:

- Make short sci-fi stories

- Create fantasy or emotional skits

- Visualize motivational narrations

- Build realistic AI-generated B-rolls

Example:

A YouTuber can write a script in ChatGPT → generate visuals with Sora → add voiceover → and post it as a cinematic short in an hour.

That used to take days before Sora.

💼 2. Marketing & Business Agencies

Marketers now use Sora to:

- Make product ads before prototypes exist

- Create brand stories for campaigns

- Visualize explainer videos in minutes

Example Prompt:

“A premium smartwatch floating over a dark marble background, with glowing blue energy lines moving around it.”

That’s a ready-made product ad — no studio, no camera, no crew needed.

🧑‍🏫 3. Educators and Researchers

Educators use Sora for:

- Scientific visualizations (e.g., cell division, space exploration)

- Animated history lessons

- Language learning videos

It’s even being tested in universities as a visual learning aid for complex concepts.

🎬 4. Filmmakers & Indie Artists

Filmmakers use Sora for concept visualization — creating full storyboard sequences before real shooting starts.

They can:

- Preview camera angles

- Test VFX ideas

- Pitch movie concepts visually to investors

This is revolutionizing indie filmmaking.

🎮 5. Game Developers

Developers use Sora to:

- Generate cinematic cutscenes

- Visualize gameplay concepts

- Create moodboards for storylines

Before, game trailers required studios.

Now, one person with Sora and a good idea can produce a full cinematic trailer in hours.

💰 How to Monetize Your Sora Skills

Here’s where it gets exciting — you can earn real money using Sora.

Let’s look at the best ways 👇

💼 1. Freelance AI Video Creator

Offer your services on:

- Fiverr

- Upwork

- Freelancer.com

💸 Earning Potential: $50–$500 per project

Popular gigs:

- “AI cinematic video creator”

- “Custom Sora-generated video for brand ads”

- “Text to video explainer using AI”

🎥 2. YouTube Automation

Use Sora to create faceless YouTube channels:

- Motivational stories

- AI documentaries

- Sci-fi shorts

- Nature visuals with narration

💸 Earning Potential: $1000+/month through AdSense & affiliate links

🏢 3. Brand Collaborations

Businesses pay creators to make AI concept ads and prototype visuals.

Example:

A fashion brand hires you to create futuristic ad concepts — using Sora, you can deliver cinematic ads in hours.

🧠 4. Course Creation & Teaching

If you master Sora, you can create:

- Online courses (“Learn AI Video Generation with Sora”)

- Tutorials for YouTube

- Consulting for creators

💸 Earning Potential: $500–$10,000+ depending on audience.

🧩 5. Sell AI Visual Assets

You can export Sora clips and sell them on:

- Envato

- MotionArray

- Pond5

- Artgrid

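People pay for B-rolls, transitions, and cinematic scenes, but check each marketplace’s rules first: some restrict AI-generated submissions or require them to be labeled.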

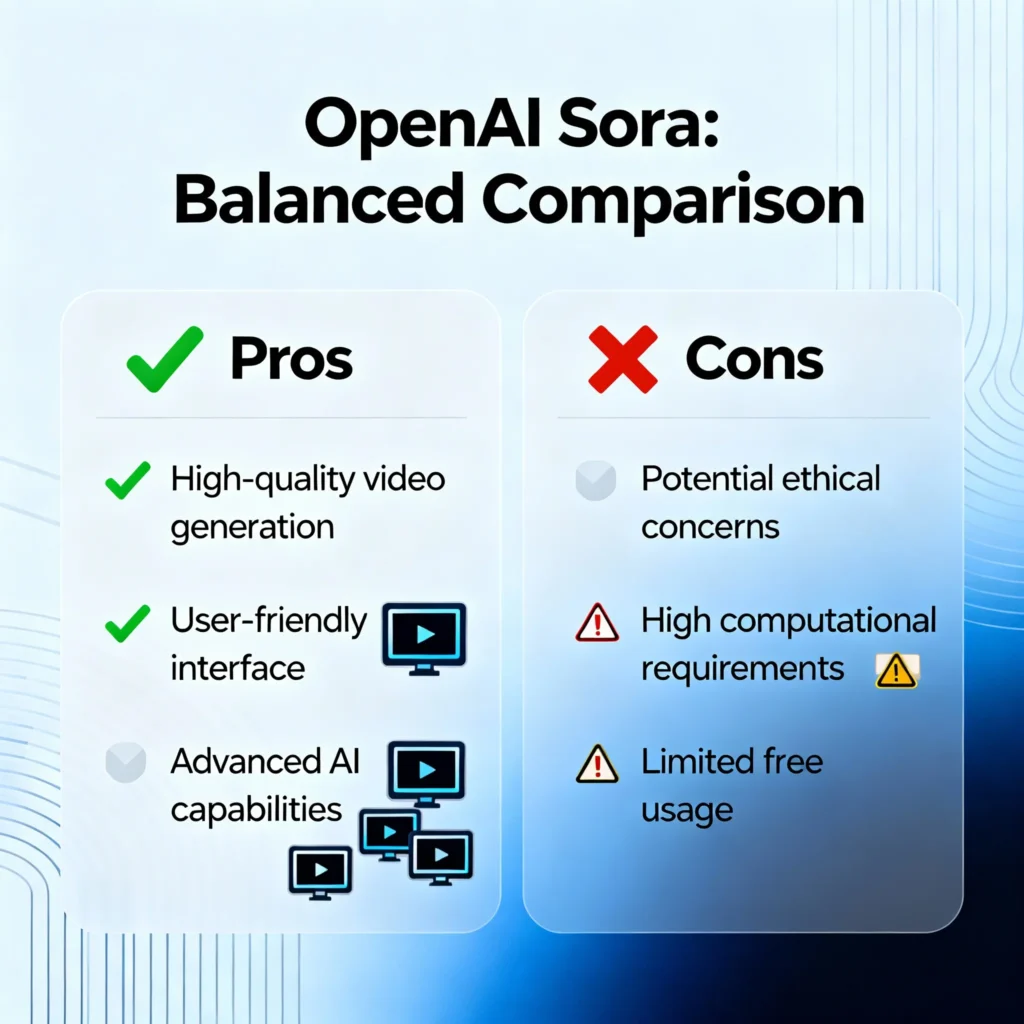

⚖️ Pros and Cons of OpenAI Sora

Every revolutionary tool has its strengths and weaknesses — let’s analyze Sora honestly 👇

✅ Pros

- Unmatched Realism – Among the most realistic AI video generators available.

- Cinematic Motion – Realistic camera pans, zooms, and scene transitions.

- Scalable for All – From short clips to multi-shot sequences.

- Creative Freedom – Any style, any tone, from anime to hyper-realism.

- Time-Saving – Can cut production time dramatically for concepts, drafts, and B-roll.

- Integration – Uses your ChatGPT account and accepts images as input.

- Future-Proof – AI video is the next frontier of content.

❌ Cons

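- Limited Access – Availability depends on your country and ChatGPT plan, and usage is capped.

- Heavy Computation – Videos render on OpenAI’s cloud servers, so generation takes time and counts against usage limits.

- Audio Depends on Version – The original Sora generates silent video; synchronized audio arrived with Sora 2.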

- Copyright Unclear – Ownership laws still evolving.

- Ethical Risks – Deepfake misuse is a serious concern.

🎨 Real Creator Feedback (2025)

Here’s what top creators say about Sora:

🎥 “Sora feels like having a Hollywood studio in your laptop.”

— Ethan James, Filmmaker (NYC)

🎬 “It understands my script better than my old editing team.”

— Lara K., YouTuber (1.2M Subs)

🧠 “For education, it’s game-changing. Students visualize complex topics instantly.”

— Dr. Olivia Patel, EdTech Researcher

💼 “We replaced 5 days of ad production with 5 minutes in Sora.”

— Aditya Mehra, Marketing Director

The Future of AI Filmmaking with OpenAI Sora (Ethics, Challenges & Beyond 2025)

⚖️ Ethical & Legal Challenges in AI Video Creation

AI-generated video content is revolutionary — but it also raises serious ethical concerns.

With tools like OpenAI Sora, the line between “real” and “synthetic” visuals is fading faster than ever.

Let’s explore the biggest concerns 👇

⚠️ 1. Deepfake & Misuse Concerns

Deepfake videos have already caused social panic, political manipulation, and personal reputation damage.

The power of Sora — creating near-realistic human videos — could be misused for:

- Fake celebrity or political clips

- Fraudulent advertising

- Harassment or revenge content

🧠 Solution:

OpenAI says Sora includes safeguards against harmful uses, and that its videos carry C2PA provenance metadata and a visible watermark by default, so they can be identified as AI-generated.

Future laws will likely require disclosure labels like:

“This video was created using AI.”

🧾 2. Copyright and Ownership Issues

Who owns the video?

- The prompt creator?

- The AI model owner (OpenAI)?

- Or the subject appearing in it?

Currently, AI laws differ by region.

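In January 2025, the U.S. Copyright Office reaffirmed that purely AI-generated material is not copyrightable, while works with sufficient human authorship (such as creative selection, arrangement, or modification of AI output) can be protected to the extent of that human contribution.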

So, creators should:

- Keep prompt logs

- Add unique creative direction

- Document editing and post-production steps

💡 These records help document your human contribution, which can support a copyright claim, though they don’t guarantee legal protection.
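A prompt log can be as simple as one JSON line per generation. The sketch below uses a hypothetical format (the field names are assumptions, not any legal or OpenAI standard) that records the prompt, settings, your own creative notes, and a SHA-256 fingerprint of the exported file, so each entry is tied to a specific output. Keeping such records does not by itself secure copyright.

```python
import hashlib
import json
from datetime import datetime, timezone


def log_entry(prompt: str, settings: dict, notes: str, output_bytes: bytes) -> str:
    """Return one JSON line describing a generation and your creative decisions."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "settings": settings,
        "notes": notes,  # your human creative direction and edits
        "sha256": hashlib.sha256(output_bytes).hexdigest(),  # fingerprint of the exported file
    }
    return json.dumps(entry, sort_keys=True)


def append_log(path: str, line: str) -> None:
    """Append one entry to a JSON Lines log file."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")


line = log_entry(
    prompt="Ancient warrior walking through desert storm, slow motion",
    settings={"aspect": "16:9", "resolution": "1080p", "seconds": 10},
    notes="Chose take 2 of 4; trimmed first second; added title card in editor",
    output_bytes=b"placeholder for the exported video file",
)
print(line)
```

In practice you would read the exported file’s bytes for `output_bytes` and call `append_log("prompt_log.jsonl", line)`; one entry per line keeps the log easy to search.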

🧬 3. Data Privacy & Training Ethics

Another concern is where the AI learns from.

If an AI model is trained on copyrighted videos without permission, it can lead to legal disputes.

OpenAI claims Sora uses:

- Licensed video datasets

- Public domain footage

- Openly available internet data

But global debate continues about the fair use principle in AI training.

🔍 4. Authenticity & Truth in Media

AI-generated content can mislead audiences.

News outlets and advertisers must ensure that viewers can differentiate synthetic content from real footage.

That’s why many organizations are adopting:

- AI authenticity tags

- Digital provenance frameworks (C2PA)

- Blockchain-based content tracking
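C2PA works by attaching cryptographically signed provenance manifests to media, which is more involved than fits here. The sketch below shows only the simpler idea behind tamper-evident logs and blockchain-based tracking: each record stores the hash of the previous one, so editing any earlier entry is detected when the chain is verified. The record fields are hypothetical, and this is not a C2PA implementation.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: Dict[str, str]) -> str:
    """SHA-256 over a canonical JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_record(chain: List[Dict[str, str]], action: str, content_hash: str) -> None:
    """Append a record whose hash covers its data plus the previous record's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    body = {"action": action, "content_hash": content_hash, "prev": prev}
    body["hash"] = _digest(body)  # computed before the "hash" key is added
    chain.append(body)


def verify(chain: List[Dict[str, str]]) -> bool:
    """Recompute every hash and link; any edited record makes this return False."""
    prev = GENESIS
    for rec in chain:
        body = {k: rec[k] for k in ("action", "content_hash", "prev")}
        if rec["prev"] != prev or rec["hash"] != _digest(body):
            return False
        prev = rec["hash"]
    return True


chain: List[Dict[str, str]] = []
add_record(chain, "generated with AI video tool", hashlib.sha256(b"raw clip").hexdigest())
add_record(chain, "color graded and trimmed", hashlib.sha256(b"edited clip").hexdigest())
print(verify(chain))                   # True
chain[0]["action"] = "shot on camera"  # tamper with the history
print(verify(chain))                   # False
```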

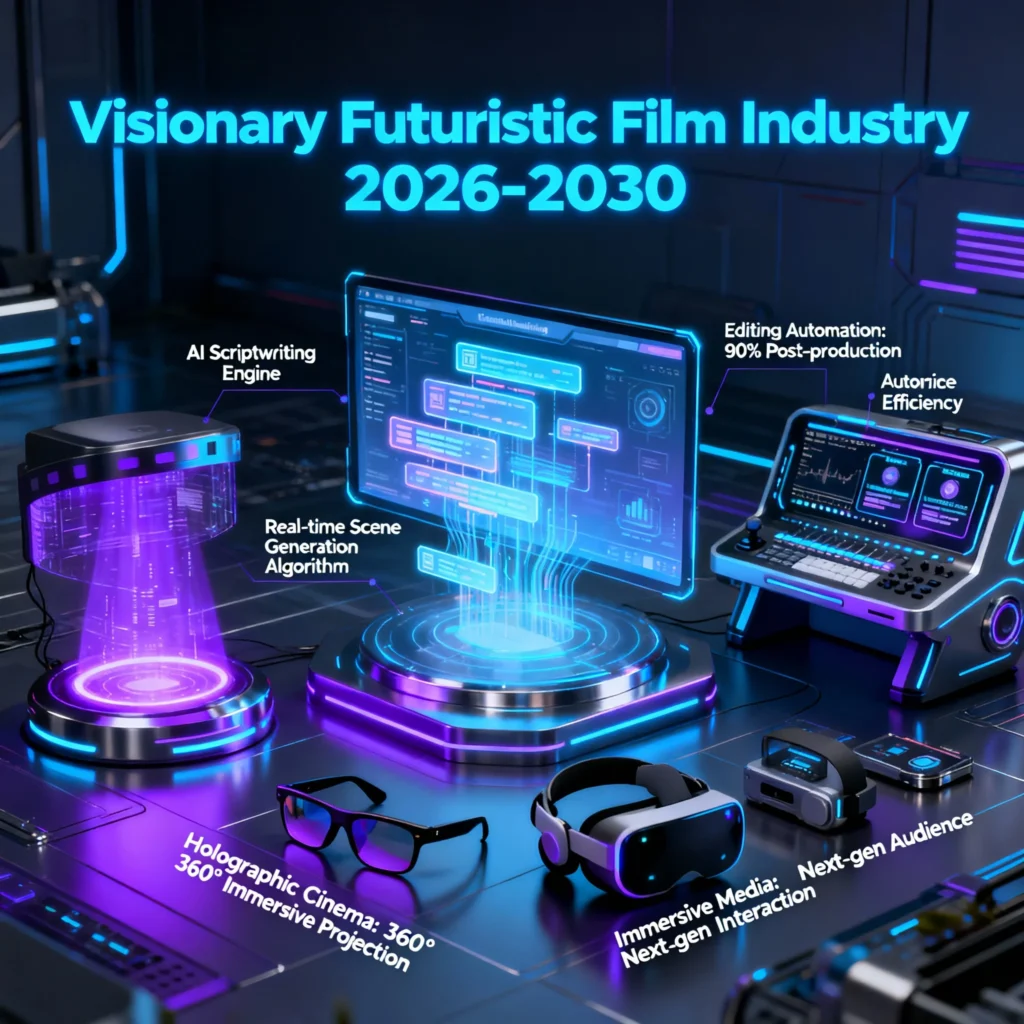

🌠 The Future of AI Filmmaking (2026–2030 Predictions)

Now, let’s look ahead — how will AI filmmaking evolve in the next 5 years?

🚀 1. Real-Time Text-to-Video Generation

By 2026–2027, AI models like Sora may be able to create videos almost instantly as you type prompts.

Imagine typing:

“A sunset over Tokyo, camera zooms into a ramen shop with steam rising.”

And seeing it rendered in 5 seconds.

That’s the next big leap: real-time AI filmmaking.

🎥 2. AI Co-Directors and Virtual Film Studios

In 2028+, creative studios will have “AI co-directors” that:

- Suggest camera angles

- Manage lighting and composition

- Edit and color-grade footage automatically

Small indie teams will produce Netflix-level films — all inside a computer.

🧠 3. Personalized AI Movies

Every viewer could watch a customized version of a film.

Example:

- You prefer emotional tone → Sora adjusts mood and color.

- You love sci-fi → Sora adds futuristic visuals.

Entertainment will become personalized storytelling.

🪄 4. AI + Human Collaboration Becomes Standard

AI won’t replace filmmakers — it’ll amplify them.

Writers, editors, and directors will use AI for:

- Scene ideation

- Visual simulation

- Budget-efficient production

It’s the Adobe moment for film — empowering, not replacing creators.

💰 5. AI Cinema Will Create a New Economy

Entire new industries will emerge:

- AI movie startups

- Virtual actors and influencers

- Synthetic ad agencies

- AI-based media licensing platforms

AI-generated actors will star in commercials, music videos, and interactive entertainment.

🧩 Real-World Industry Adoption (2025 & Beyond)

Let’s explore how different sectors are already integrating Sora and similar tools 👇

🎬 1. Hollywood & Entertainment

- Studios use AI to pre-visualize scenes before real shooting.

- AI assists in storyboarding and animatics.

- Some directors even use Sora for proof-of-concept short films.

🎞️ Example:

In one reported example, an indie studio recreated Blade Runner–style scenes entirely with Sora, claiming savings of $250,000 in production costs.

📺 2. Advertising & Branding

AI ads are now mainstream.

Brands like Nike, Samsung, and Coca-Cola experiment with AI-generated campaigns for quick social launches.

Why?

- Faster turnaround

- Cost efficiency

- Endless creative variations

Example Prompt:

“A bottle of Coca-Cola floats in zero gravity surrounded by neon lights and happy faces.”

That’s a viral ad — without filming anything.

🎓 3. Education & Training

AI videos are transforming online education.

Teachers now use Sora to create:

- Animated science experiments

- Historical re-enactments

- 3D visual storytelling for kids

💡 Research on multimedia learning suggests that combining visuals with words can improve retention compared with text-only lessons.

🏥 4. Healthcare & Simulation

Medical institutions use AI video tools for:

- Surgery training simulations

- Patient education videos

- Anatomy visualizations

Doctors can use Sora-generated visuals to illustrate complex procedures before actual operations, though any medical content needs expert review for accuracy.

🏗️ 5. Architecture & Engineering

Architects now visualize spaces in minutes using AI text prompts:

“Modern apartment with marble flooring, open balcony, and sunset lighting.”

Sora converts that into cinematic walkthrough clips (up to 1080p), useful for early client presentations.

🔥 The Human Side of AI Video Creation

Behind every powerful AI video lies one thing that AI can’t replace — emotion.

Sora can replicate light, texture, and movement.

But human vision adds meaning, story, and empathy.

The best creators of 2025 and beyond will blend:

- Human emotion ❤️

- AI efficiency ⚙️

- Ethical responsibility ⚖️

That’s the formula for timeless storytelling.

🧠 Expert Predictions on OpenAI Sora

🎞️ “By 2030, AI video generation will be as normal as smartphone cameras are today.”

— Timothy Yang, Tech Futurist

💡 “Sora will redefine the future of visual storytelling.”

— Rachel Lin, Film Director

🚀 “Soon, we won’t need cameras — creativity will be enough.”

— Elon D., Visual AI Researcher

💬 FAQs – OpenAI Sora 2025

❓ 1. Is OpenAI Sora free to use?

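Not entirely. Sora has been included with ChatGPT Plus and Pro subscriptions since December 2024, with usage limits that depend on the plan, and Sora 2 launched in September 2025 with free, invite-based access at first.

Check OpenAI’s pricing page for current plans, as limits change often.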

❓ 2. Can I make money using Sora?

Yes — through freelance work, YouTube videos, brand ads, and digital assets.

Just ensure you respect AI-generated content rules.

❓ 3. Does Sora include audio and voice features?

The original Sora generates silent videos.

Sora 2, released in September 2025, generates synchronized dialogue and sound effects along with the video.

❓ 4. Is Sora available worldwide?

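Availability has rolled out region by region: the December 2024 launch initially excluded the UK and the EU, and Sora 2 launched first in the U.S. and Canada.

Check OpenAI’s help pages for the current list of supported countries.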

❓ 5. What makes Sora different from Runway or Pika Labs?

Sora is built for cinematic realism — longer scenes, emotional storytelling, and multi-object motion understanding.

Others focus on short, stylized videos.

❓ 6. Will AI replace human filmmakers?

No — AI will be a creative collaborator.

It speeds up production, but storytelling still requires human imagination.

❓ 7. Can I use Sora videos commercially?

Generally yes: OpenAI’s Terms of Use assign you rights to the content you generate, subject to its usage policies.

Always read the current Terms of Use before commercial publishing.

❓ 8. How can I join the Sora beta?

Sign up at sora.chatgpt.com and join the waitlist.

Beta invites are gradually expanding through 2025.

❓ 9. Can Sora make full movies?

Not on its own. Sora generates short clips, but you can link several (for example, with its Storyboard feature) and edit them into a cohesive story.

Filmmakers are already experimenting with short AI films.

❓ 10. What’s next after Sora?

OpenAI hasn’t published a detailed roadmap, but a likely direction is tighter integration with ChatGPT’s vision and voice features, moving toward full text-to-film experiences where users write a story and watch it unfold.

🌟 Final Thoughts – Should You Use Sora in 2025?

If you’re a creator, marketer, or filmmaker — YES.

Sora is not just another AI tool; it’s a new medium of creativity.

It blends imagination with technology in a way humanity has never experienced before.

Just remember:

“AI doesn’t kill creativity — it supercharges it.”

Master it early, and you’ll lead the AI filmmaking revolution.
